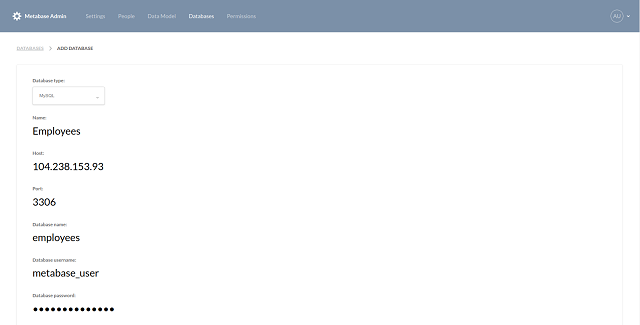

Presto lets you query data where it lives, including Hive, Cassandra, relational databases, and even proprietary data stores. A single Presto query can combine data from multiple sources, enabling analytics across your entire organization. Presto is aimed at analysts who expect response times ranging from sub-second to minutes.

Metabase Cloud is a business intelligence tool that lets you set up Metabase for your company without being an engineer. Metabase itself is an open source project hosted on GitHub.

This page describes a way to connect to Treasure Data Presto from the Metabase Mac OS X application. To set up the Presto connection:

- Install Metabase (see Metabase installation).

- Navigate to the Databases page in the Admin menu.

- Click Add database.

I had a SQL query that failed on one of our Presto 0.208 clusters with the “Query exceeded per-node total memory” (com.facebook.presto.ExceededMemoryLimitException) error. How can you solve this problem? I will consider a few possible solutions, but first let’s review memory allocation in Presto.

Memory Pools

Presto 0.208 has 3 main memory areas: General pool, Reserved pool and Headroom.

All queries start running and consuming memory from the General pool. When it is exhausted, Presto selects a query that currently consumes the largest amount of memory and moves it into the Reserved pool on each worker node.

To guarantee that the largest possible query can execute, the size of the Reserved pool is defined by the query.max-total-memory-per-node option.

But there is a limitation: only one query can run in the Reserved pool at a time! If there are multiple large queries that do not have enough memory to run in the General pool, they are queued, i.e. executed one by one in the Reserved pool. Small queries can still run concurrently in the General pool.
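The promotion rule described above can be illustrated with a toy model. This is only a sketch of the behaviour as described in this post, not Presto's actual scheduler code; `promote_largest` and its parameters are hypothetical names, and memory is tracked for a single node only.

```python
from typing import Dict, Optional, Tuple


def promote_largest(
    usage_gb: Dict[str, float],
    general_pool_gb: float,
    reserved_query: Optional[str] = None,
) -> Tuple[Dict[str, float], Optional[str]]:
    """Toy model of the promotion rule (an illustration, not Presto's code).

    usage_gb maps a query id to its current memory use on one node.
    Returns the queries left in the General pool and the id of the query
    occupying the Reserved pool (None if the Reserved pool is free).
    """
    general = {q: gb for q, gb in usage_gb.items() if q != reserved_query}
    exhausted = sum(general.values()) >= general_pool_gb
    # Promote only when the General pool is exhausted and the Reserved
    # pool is free, because it can hold a single query at a time.
    if reserved_query is None and general and exhausted:
        reserved_query = max(general, key=general.get)
        del general[reserved_query]
    return general, reserved_query


general, reserved = promote_largest(
    {"q1": 4.0, "q2": 30.0, "q3": 2.0}, general_pool_gb=35.0
)
print(general, reserved)
```

In this example the three queries together need 36 GB, which exhausts a 35 GB General pool, so the largest one (`q2`) moves to the Reserved pool. If the Reserved pool were already occupied, no further query would be promoted.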

Headroom is an additional reserved space in the JVM heap for I/O buffers and other memory areas that are not tracked by Presto. The size of Headroom is defined by the memory.heap-headroom-per-node option.

The size of the General pool is calculated as follows:
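Judging by the arithmetic used later in this post (54 – 10 – 9 = 35), the General pool is what remains of the JVM heap after the Reserved pool and the Headroom are subtracted. A minimal sketch of that calculation follows; `general_pool_gb` is a hypothetical helper name, and reading 54 GB as the heap size is an assumption drawn from that arithmetic.

```python
def general_pool_gb(heap_gb: float, reserved_gb: float, headroom_gb: float) -> float:
    """General pool = JVM heap (-Xmx) - Reserved pool - Headroom, per node."""
    size = heap_gb - reserved_gb - headroom_gb
    if size < 0:
        # Sanity check added for this sketch only.
        raise ValueError("Reserved pool and Headroom exceed the JVM heap")
    return size


# Values taken from the configurations discussed later in this post.
print(general_pool_gb(heap_gb=54, reserved_gb=10, headroom_gb=9))
print(general_pool_gb(heap_gb=54, reserved_gb=40, headroom_gb=9))
```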

There is an option to disable the reserved pool, and I will consider it as well.

Initial Attempt

In my case the query was:

and it failed after running for 2 minutes with the following error:

The initial cluster configuration:

With this configuration the size of the Reserved pool is 10 GB (it is equal to query.max-total-memory-per-node), and the General pool is 35 GB (54 – 10 – 9):

The query was executed in the General pool, but it hit the 10 GB limit defined by the query.max-total-memory-per-node option and failed.

resource_overcommit = true

The resource_overcommit session property allows a query to exceed the per-node memory limit:

Now the query ran for about 6 minutes and failed with:

It was the only query running on the cluster, so you can see that it was able to use the entire General pool of 35 GB per node, although that still was not enough for it to complete successfully.

Increasing query.max-total-memory-per-node

Since 35 GB was not enough to run the query, let’s try to increase query.max-total-memory-per-node and see if this helps the query complete successfully (a cluster restart is required):

With this configuration the size of the Reserved pool is 40 GB, and the General pool is 5 GB (54 – 40 – 9):

Now the query ran for 7 minutes and failed with:

It is a little bit funny, but with this configuration setting resource_overcommit = true makes things worse:

Now the query fails within 34 seconds with the following error:

resource_overcommit forces the query to use the General pool only; it can no longer migrate to the Reserved pool, and the General pool is just 5 GB now.

But there is a more serious problem. A General pool of 5 GB and a Reserved pool of 40 GB allow you to run larger queries (up to 40 GB per node), but significantly reduce the concurrency of the cluster, because the Reserved pool can run only one query at a time and the General pool, which can run queries concurrently, is now too small. So you can expect more queued queries waiting for the Reserved pool to become available.

experimental.reserved-pool-enabled = false

To solve the concurrency problem and allow running queries with larger memory, Presto 0.208 allows you to disable the Reserved pool (this is going to be the default in future Presto versions):

Now you can set an even larger value for the query.max-total-memory-per-node option:

With this configuration all memory except Headroom is used for the General pool:

But the query still fails after running for 7 minutes 30 seconds:

So there is still not enough memory.
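The three pool layouts tried so far can be compared side by side. The sketch below assumes the 54 GB heap and 9 GB Headroom implied by the arithmetic earlier in this post; `pool_layout` is a hypothetical helper, and the disabled-pool case simply drops the Reserved pool from the subtraction.

```python
HEAP_GB = 54      # JVM heap per node, as implied by "54 - 10 - 9" (assumption)
HEADROOM_GB = 9   # memory.heap-headroom-per-node (assumption)


def pool_layout(max_total_per_node_gb, reserved_pool_enabled=True):
    """Return (general_gb, reserved_gb) for one worker node."""
    # With the Reserved pool disabled, the limit no longer carves out memory.
    reserved = max_total_per_node_gb if reserved_pool_enabled else 0
    return HEAP_GB - HEADROOM_GB - reserved, reserved


layouts = [
    ("limit 10 GB, Reserved pool on", pool_layout(10)),
    ("limit 40 GB, Reserved pool on", pool_layout(40)),
    ("Reserved pool off", pool_layout(40, reserved_pool_enabled=False)),
]
for label, (general, reserved) in layouts:
    print(f"{label}: General {general} GB, Reserved {reserved} GB")
```

Only the last layout gives a single query access to all 45 GB without taking memory away from concurrently running queries.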

experimental.spill-enabled=true

Since we cannot allocate more memory on the nodes (we have hit the physical memory limit), let’s see if the spill-to-disk feature can help (a cluster restart is required):

Unfortunately this does not help; the query still fails with the same error:

The reason is that not all operations can be spilled to disk, and as of Presto 0.208 COUNT DISTINCT (MarkDistinctOperator) is one of those that cannot.

So it looks like the only way to make this query work is to use compute instances with more memory or to add more nodes to the cluster.

In my case I had to grow the cluster to 5 nodes to run the query successfully. It took 9 minutes 45 seconds and consumed 206 GB of peak memory.
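As a rough cross-check of the node count, the reported peak memory can be divided by the memory each node offers to queries. This is only a back-of-the-envelope sketch: it assumes memory is spread evenly across nodes, that the 206 GB peak would be the same on a different cluster size, and that each node offers 45 GB (a 54 GB heap minus 9 GB of Headroom with the Reserved pool disabled). `min_nodes` is a hypothetical helper.

```python
import math


def min_nodes(peak_total_gb: float, usable_per_node_gb: float) -> int:
    """Smallest node count whose combined General pools cover the peak."""
    return math.ceil(peak_total_gb / usable_per_node_gb)


usable_gb = 54 - 9  # heap minus Headroom, Reserved pool disabled (assumption)
print(min_nodes(206, usable_gb))
```

Under these assumptions the estimate agrees with the 5 nodes that were needed in practice. Data skew can push individual nodes above the average, so treat the result as a lower bound.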

Checking Presto Memory Settings

If you do not have access to the jvm.config and config.properties configuration files or to server.log, you can query the JMX connector to get details about the memory settings.

To get the JVM heap size information on nodes (max means -Xmx setting):

To get the Reserved and General pool for each node in the cluster:

When the Reserved pool is disabled, the query returns information for the General pool only:

Conclusion

- You can try to increase query.max-total-memory-per-node on your cluster, but to preserve concurrency make sure that the Reserved pool is disabled (this will be the default in Presto soon).

- Enabling the disk-spill feature is helpful, but remember that not all Presto operators support it.

- Sometimes only using instances with more memory or adding more nodes can help solve the memory issues.

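Metabase fails to connect to a MySQL 5.6.16-log database: Unknown system variable ‘session_track_schema’

Case 1: Metabase's application database is MySQL 5.6.16

Metabase reports an error immediately and fails to start.

At startup Metabase reports a session_track_schema error. The log file shows:

Case 2: Adding MySQL 5.6.16 as a data source

The same problem occurs if a data source runs this version:

The problem is caused by the database version. For details, see the discussion in Metabase GitHub issue 9483.

There are a great many Metabase issues about this problem, and they are easy to get lost in. I eventually found a fix and have verified that it works.

The final solution is described in issue 9954: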

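Root cause: session-track-schema

Leaving aside whether MariaDB is a branch or a fork of MySQL, the two seem to have had compatibility problems all along. Metabase's MySQL/MariaDB driver is the mariadb-java-client package.

Metabase does not support Alibaba RDS MySQL 5.6.16-log because version 0.32.0 switched to MariaDB Connector/J, and older MySQL versions do not support session-track-schema.

It later turned out that only the 5.6.16-log minor version is affected. No problems have been found with other versions so far.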

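Fix: the mariadb-java-client package

First, determine which version of the MySQL/MariaDB driver Metabase uses by looking under the project.

You need to download the source package: mariadb-java-client-2.3.0-sources.jar (the version used by the current Metabase release).

After compiling, replace the class and restart Metabase. Alternatively, you can download my compiled AbstractConnectProtocol.class directly.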