Feature scaling is a method used to normalize the range of independent variables or features of data. In data processing, it is also known as data normalization and is generally performed during the data preprocessing step.

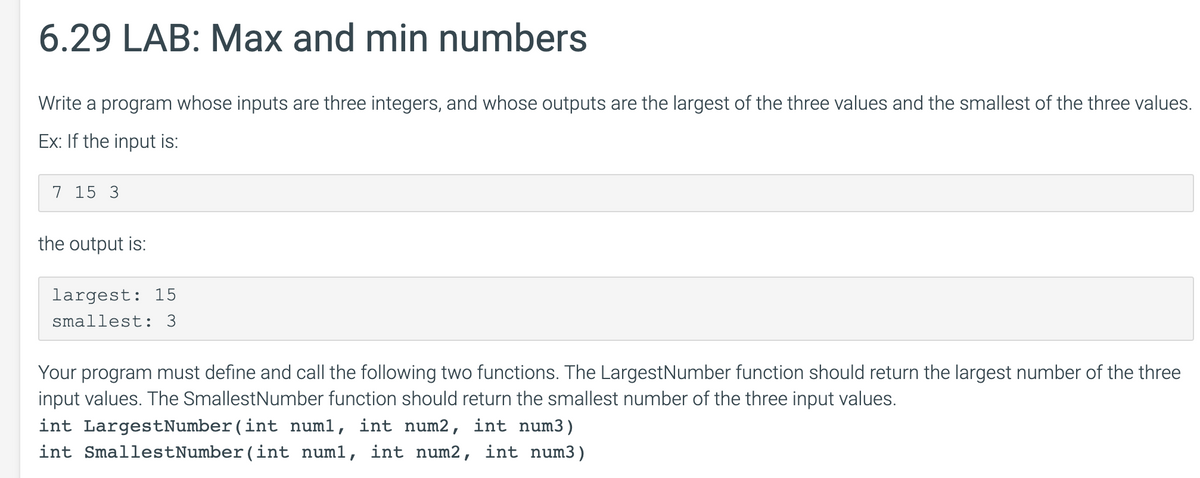


Motivation

Since the range of values of raw data varies widely, in some machine learning algorithms objective functions will not work properly without normalization. For example, many classifiers calculate the distance between two points using the Euclidean distance. If one of the features has a broad range of values, the distance will be governed by this particular feature. Therefore, the range of all features should be normalized so that each feature contributes approximately proportionately to the final distance.
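The Euclidean-distance argument above can be made concrete with a small nearest-neighbour lookup. This is a minimal sketch, assuming hypothetical records of (age in years, annual income in dollars) and using min-max scaling (described under Methods) as the normalization; the helper names `euclidean`, `min_max_columns` and `nearest_to` are illustrative only.

```python
import math


def euclidean(p, q):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def min_max_columns(table):
    """Min-max scale every feature (column) of a dict of records to [0, 1]."""
    columns = list(zip(*table.values()))
    lows = [min(c) for c in columns]
    spans = [max(c) - min(c) for c in columns]
    return {
        name: tuple((v - lo) / span for v, lo, span in zip(row, lows, spans))
        for name, row in table.items()
    }


def nearest_to(name, table):
    """Name of the record closest to `name` under Euclidean distance."""
    others = [k for k in table if k != name]
    return min(others, key=lambda k: euclidean(table[name], table[k]))


# Hypothetical records: (age in years, annual income in dollars).
data = {"p": (30, 50_000), "q": (70, 51_000), "r": (32, 60_000), "s": (50, 150_000)}
scaled = min_max_columns(data)

print(nearest_to("p", data))    # q: the 40-year age gap barely registers next to income
print(nearest_to("p", scaled))  # r: almost the same age, moderate income difference
```

On the raw data the income column dominates every distance, so q looks closest to p despite a 40-year age gap; once both columns span [0, 1], r (two years apart in age) becomes the nearest neighbour.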

Another reason why feature scaling is applied is that gradient descent converges much faster with feature scaling than without it.[1]
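The convergence claim can be illustrated with a small experiment. This is a sketch under stated assumptions, not a benchmark: the toy data, the model y ≈ b + w1·x1 + w2·x2 fitted by batch gradient descent on the mean squared error, the tolerance, and the step size 1/trace(H) (a safe, tuning-free choice where H is the Hessian of the loss) are all choices made here for illustration. The helper `gd_iterations` is hypothetical.

```python
import math
import statistics


def standardize(values):
    """Z-score a feature column using the population standard deviation."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)
    return [(v - mu) / sigma for v in values]


def gd_iterations(columns, y, tol=1e-6, max_iter=10_000):
    """Count gradient-descent steps on the MSE of y ~ b + sum_j w_j * x_j
    until the gradient norm drops below tol; return max_iter otherwise."""
    n = len(y)
    # Each row: a constant 1 for the intercept b, then that sample's features.
    rows = [[1.0] + [col[i] for col in columns] for i in range(n)]
    d = len(rows[0])
    # The MSE Hessian is H = (2/n) X^T X. Its trace bounds its largest
    # eigenvalue, so a step of 1/trace(H) is always stable (assumed step rule).
    trace_h = 2.0 / n * sum(v * v for row in rows for v in row)
    step = 1.0 / trace_h
    w = [0.0] * d
    for k in range(max_iter):
        residuals = [sum(wj * xj for wj, xj in zip(w, row)) - yi
                     for row, yi in zip(rows, y)]
        grad = [2.0 / n * sum(r * row[j] for r, row in zip(residuals, rows))
                for j in range(d)]
        if math.sqrt(sum(g * g for g in grad)) < tol:
            return k
        w = [wj - step * gj for wj, gj in zip(w, grad)]
    return max_iter


# Hypothetical toy data: x1 lies in [0, 1], x2 is in the hundreds.
x1 = [0.1, 0.4, 0.2, 0.9, 0.6]
x2 = [300.0, 150.0, 500.0, 250.0, 400.0]
y = [3.0 + 2.0 * a + 0.01 * b for a, b in zip(x1, x2)]

unscaled_steps = gd_iterations([x1, x2], y)
scaled_steps = gd_iterations([standardize(x1), standardize(x2)], y)
print(unscaled_steps, scaled_steps)
```

With the raw features, the large values of x2 force a tiny stable step, so progress along the intercept and x1 directions is negligible and the run exhausts its 10,000-iteration budget. After standardization all columns have comparable scale and the same tolerance is reached in far fewer steps.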

It's also important to apply feature scaling if regularization is used as part of the loss function (so that coefficients are penalized appropriately).

Methods


Rescaling (min-max normalization)

Rescaling, also known as min-max scaling or min-max normalization, is the simplest method: it rescales the range of each feature to [0, 1] or [−1, 1]. The choice of target range depends on the nature of the data. The general formula for min-max scaling to [0, 1] is:

$$x' = \frac{x - \min(x)}{\max(x) - \min(x)}$$

where x is an original value and x' is the normalized value. For example, suppose that we have students' weight data, and the students' weights span [160 pounds, 200 pounds]. To rescale this data, we first subtract 160 from each student's weight and then divide the result by 40 (the difference between the maximum and minimum weights).
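The weight example can be sketched directly. This is a minimal illustration assuming a hypothetical list of four student weights in pounds; `min_max_normalize` is an illustrative name, and raising an error on a constant feature (where max(x) − min(x) = 0) is a design choice made here, not part of the method's definition.

```python
def min_max_normalize(values):
    """Rescale values to [0, 1]: x' = (x - min(x)) / (max(x) - min(x))."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # The denominator would be zero for a constant feature.
        raise ValueError("min-max scaling is undefined for a constant feature")
    return [(v - lo) / (hi - lo) for v in values]


weights = [160, 170, 185, 200]  # hypothetical student weights in pounds
print(min_max_normalize(weights))  # [0.0, 0.25, 0.625, 1.0]
```

Each weight has 160 subtracted and is divided by 40, so the lightest student maps to 0 and the heaviest to 1.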

To rescale a range between an arbitrary set of values [a, b], the formula becomes:

$$x' = a + \frac{(x - \min(x))(b - a)}{\max(x) - \min(x)}$$

where a and b are the minimum and maximum of the target range.
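A minimal sketch of rescaling to an arbitrary target range [a, b], reusing hypothetical weights in pounds; the function name `rescale` is illustrative. Setting a = 0 and b = 1 recovers plain min-max scaling to [0, 1].

```python
def rescale(values, a, b):
    """Map values linearly onto [a, b]:
    x' = a + (x - min(x)) * (b - a) / (max(x) - min(x))."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("rescaling is undefined for a constant feature")
    return [a + (v - lo) * (b - a) / (hi - lo) for v in values]


weights = [160, 170, 185, 200]  # hypothetical student weights in pounds
print(rescale(weights, -1, 1))  # [-1.0, -0.5, 0.25, 1.0]
print(rescale(weights, 0, 1))   # [0.0, 0.25, 0.625, 1.0]
```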

Mean normalization


$$x' = \frac{x - \bar{x}}{\max(x) - \min(x)}$$

where x is an original value, x' is the normalized value, and x̄ = average(x) is the mean of that feature. Another form of mean normalization divides by the standard deviation instead of the range; this is also called standardization.
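Mean normalization subtracts the feature's mean and divides by its range (maximum minus minimum), so the results are centered on zero. A minimal sketch, assuming the same hypothetical weights in pounds; `mean_normalize` is an illustrative name.

```python
def mean_normalize(values):
    """x' = (x - mean(x)) / (max(x) - min(x)); results are centered on zero."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("mean normalization is undefined for a constant feature")
    mean = sum(values) / len(values)
    return [(v - mean) / (hi - lo) for v in values]


weights = [160, 170, 185, 200]  # hypothetical student weights in pounds
print(mean_normalize(weights))  # [-0.46875, -0.21875, 0.15625, 0.53125]
```

Here the mean is 178.75 pounds and the range is 40 pounds, so the normalized values sum to zero and span an interval of width 1.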

Standardization (Z-score Normalization)

In machine learning, we can handle various types of data, e.g., audio signals and pixel values for image data, and this data can include multiple dimensions. Feature standardization makes the values of each feature in the data have zero mean (from subtracting the mean in the numerator) and unit variance. This method is widely used for normalization in many machine learning algorithms (e.g., support vector machines, logistic regression, and artificial neural networks).[2][citation needed] The general method of calculation is to determine the distribution mean and standard deviation for each feature. Next, we subtract the mean from each feature. Then we divide the mean-subtracted values of each feature by its standard deviation.

$$x' = \frac{x - \bar{x}}{\sigma}$$

where x is the original feature vector, x̄ = average(x) is the mean of that feature vector, and σ is its standard deviation.
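The three steps above (compute the mean and standard deviation, subtract the mean, divide by the standard deviation) can be sketched with Python's `statistics` module. This sketch assumes the population standard deviation (`statistics.pstdev`); some tools use the sample standard deviation instead, which changes the results slightly. The feature values are hypothetical.

```python
import statistics


def standardize(values):
    """Z-score: x' = (x - mean) / sigma, using the population standard deviation."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        raise ValueError("standardization is undefined for a constant feature")
    return [(v - mu) / sigma for v in values]


feature = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical feature values (mean 5, std 2)
z = standardize(feature)
print(z)  # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
print(statistics.mean(z), statistics.pstdev(z))  # mean 0 and population std 1
```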

Scaling to unit length

Another option that is widely used in machine learning is to scale the components of a feature vector so that the complete vector has length one. This usually means dividing each component by the Euclidean length of the vector:

$$x' = \frac{x}{\lVert x \rVert}$$

In some applications (e.g., histogram features), it can be more practical to use the L1 norm (i.e., taxicab geometry) of the feature vector. This is especially important if the following learning steps use the scalar metric as a distance measure.[why?]
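Both variants divide a single feature vector by its norm: the Euclidean (L2) length in the usual case, or the L1 norm (sum of absolute values) for histogram-like features, which turns counts into proportions. A minimal sketch with illustrative function names and a hypothetical three-bin histogram:

```python
import math


def l2_normalize(vec):
    """Divide each component by the Euclidean length so the vector has length 1."""
    norm = math.sqrt(sum(v * v for v in vec))
    if norm == 0:
        raise ValueError("cannot normalize the zero vector")
    return [v / norm for v in vec]


def l1_normalize(vec):
    """Divide each component by the L1 norm (sum of absolute values)."""
    norm = sum(abs(v) for v in vec)
    if norm == 0:
        raise ValueError("cannot normalize the zero vector")
    return [v / norm for v in vec]


print(l2_normalize([3.0, 4.0]))  # [0.6, 0.8]
histogram = [2, 1, 1]  # hypothetical bin counts
print(l1_normalize(histogram))  # [0.5, 0.25, 0.25]
```

Unlike the per-feature methods above, these operate on one sample's whole feature vector at a time.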

Application

In stochastic gradient descent, feature scaling can sometimes improve the convergence speed of the algorithm.[2][citation needed] In support vector machines,[3] it can reduce the time to find support vectors. Note that feature scaling changes the SVM result.[citation needed]

See also

- fMLLR, Feature space Maximum Likelihood Linear Regression

References

- Ioffe, Sergey; Szegedy, Christian (2015). 'Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift'. arXiv:1502.03167 [cs.LG].

- Grus, Joel (2015). Data Science from Scratch. Sebastopol, CA: O'Reilly. pp. 99, 100. ISBN 978-1-491-90142-7.

- Juszczak, P.; Tax, D. M. J.; Duin, R. P. W. (2002). 'Feature scaling in support vector data descriptions'. Proc. 8th Annu. Conf. Adv. School Comput. Imaging: 25–30. CiteSeerX 10.1.1.100.2524.


Further reading

- Han, Jiawei; Kamber, Micheline; Pei, Jian (2011). 'Data Transformation and Data Discretization'. Data Mining: Concepts and Techniques. Elsevier. pp. 111–118.


External links